Interpolation of Robotic Actions

This work proposes a novel approach to combining robotic actions, based on movement primitives learned from demonstration with neural networks. It introduces a method for generating new (never taught) actions from the demonstrated ones, adapted to a new environment.

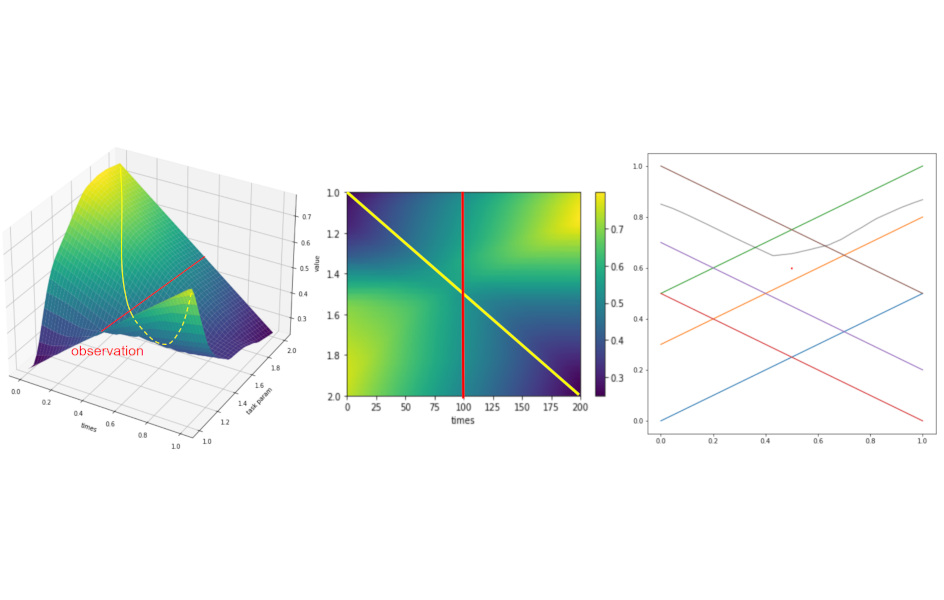

Tasks are blended by exploiting the trajectory interpolation capabilities of the CNMP (Conditional Neural Movement Primitives) network, together with a mathematical system for task parameterization developed in this work.

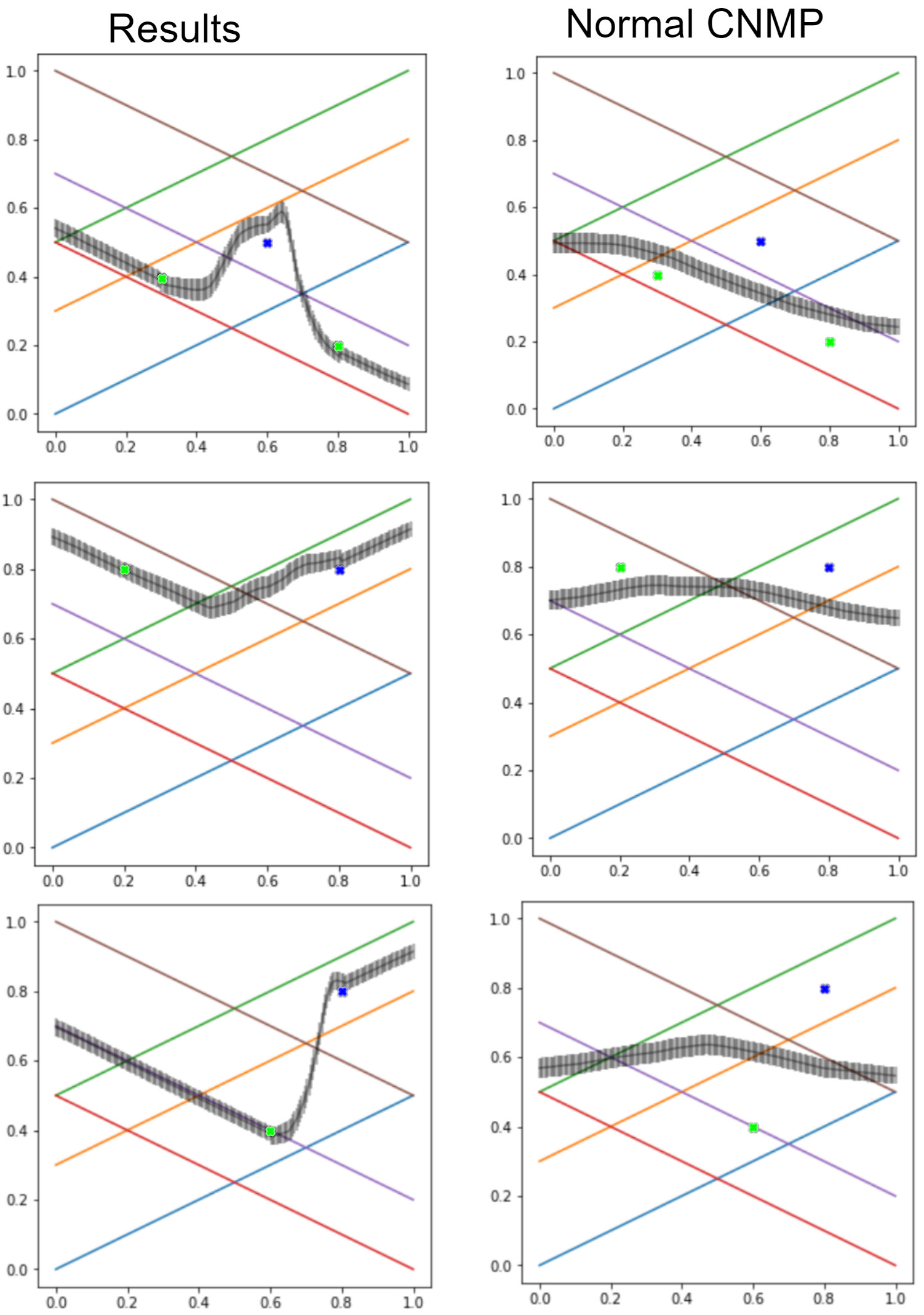
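For readers unfamiliar with CNMPs, here is a minimal NumPy sketch of the general shape of a CNMP-style forward pass. Each observed point is encoded, the encodings are averaged into one order-invariant representation, and a decoder predicts a mean and a standard deviation at any query time. It is a sketch under stated assumptions, not this repository's implementation. The weights are random (untrained). The layer sizes, the 2-D output, the names `cnmp_predict` and `gamma`, and feeding a scalar task parameter `gamma` to both encoder and decoder are all hypothetical choices made for illustration.

```python
import numpy as np

Y_DIM = 2    # hypothetical: 2-D trajectory (e.g. end-effector x, y)
R_DIM = 32   # hypothetical size of the aggregated representation

def init_mlp(sizes, rng):
    """Random (untrained) weights and zero biases for a fully connected net."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """ReLU hidden layers, linear output layer."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x

rng = np.random.default_rng(0)
# Encoder input: (t, gamma, y) per observed point.
encoder = init_mlp([1 + 1 + Y_DIM, 64, R_DIM], rng)
# Decoder input: (r, t_query, gamma); output: mean and raw std per dimension.
decoder = init_mlp([R_DIM + 1 + 1, 64, 2 * Y_DIM], rng)

def cnmp_predict(obs_t, obs_y, gamma, query_t):
    """Mean and std of y at each query time, conditioned on observed
    (t, y) points and a scalar task parameter gamma (hypothetical)."""
    n, m = len(obs_t), len(query_t)
    enc_in = np.hstack([obs_t[:, None], np.full((n, 1), gamma), obs_y])
    # Averaging makes the representation independent of observation order
    # and of how many points are observed.
    r = mlp(encoder, enc_in).mean(axis=0)
    dec_in = np.hstack([np.tile(r, (m, 1)), query_t[:, None],
                        np.full((m, 1), gamma)])
    out = mlp(decoder, dec_in)
    mean = out[:, :Y_DIM]
    std = np.logaddexp(0.0, out[:, Y_DIM:]) + 1e-3  # softplus keeps std > 0
    return mean, std

# Condition on a start and an end point, then query the whole time span.
obs_t = np.array([0.0, 1.0])
obs_y = np.array([[0.0, 0.0], [1.0, 0.5]])
query_t = np.linspace(0.0, 1.0, 50)
mean, std = cnmp_predict(obs_t, obs_y, gamma=0.5, query_t=query_t)
print(mean.shape, std.shape)  # (50, 2) (50, 2)
```

Because the representation is an average, the prediction does not depend on the order of the observations. Any number of observed points, such as the robot's current position, can be used to condition it. In a trained model, sweeping `gamma` between the values associated with two demonstrated tasks is one way to query in-between behaviours. Whether this matches the parameterization used in this work is an assumption.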

The result is new, previously unseen trajectories adapted to the environment and to the different requested tasks.

Simple demonstration

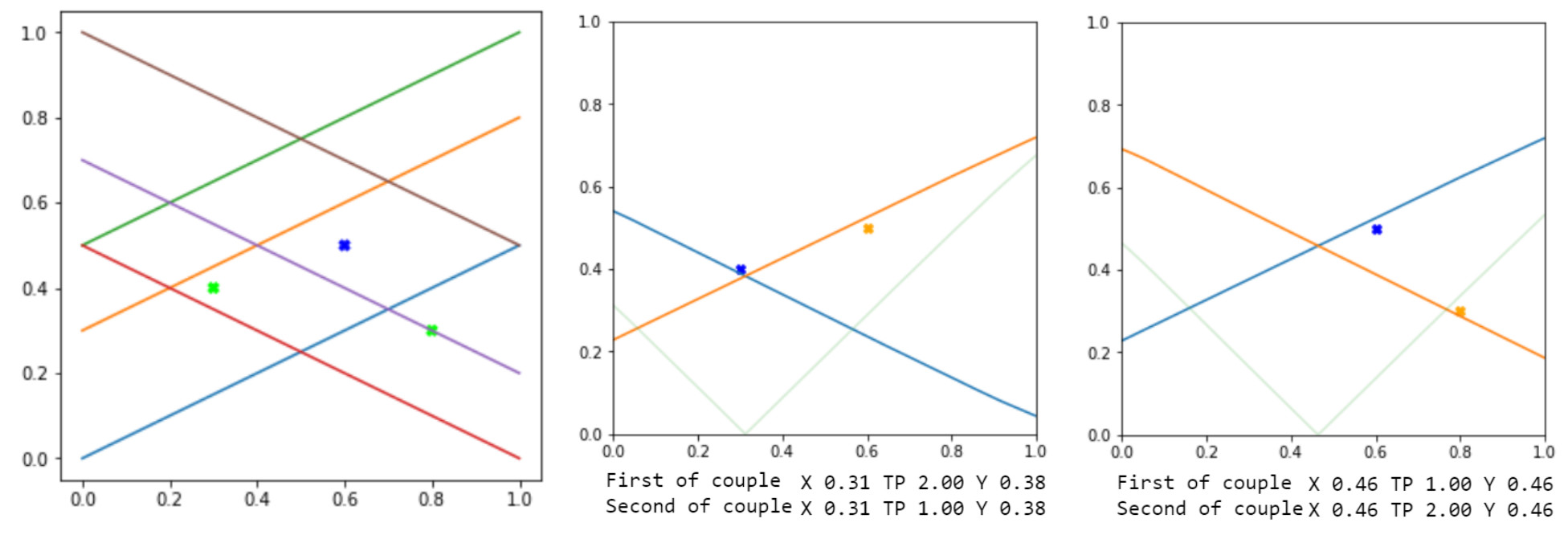

A simple demonstration of how the method enhances the network's ability to change task mid-execution (even multiple times) while maintaining temporal and spatial coherence.

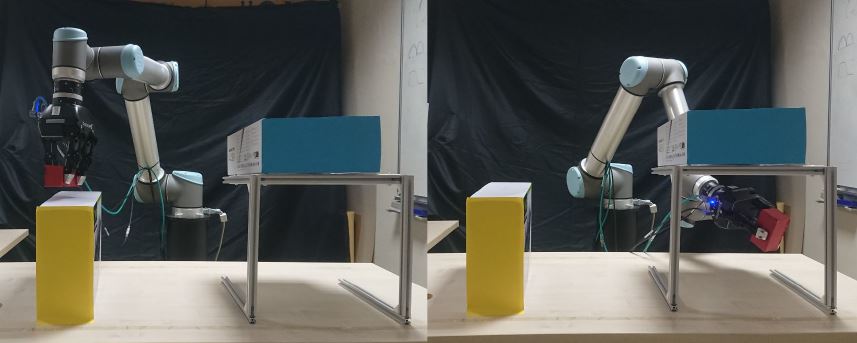
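A much simpler toy model can illustrate why switching task mid-execution need not break continuity. The sketch is not the CNMP-based method. It replaces the trained network with two hypothetical analytic demonstrations (`demo_a`, `demo_b`) and blends them with a time-varying weight. At each requested switch, the weight moves smoothly (a smoothstep ramp over a window of length `width`, in normalized time) toward the new task. The curves, switch times, and window length are illustrative assumptions.

```python
import numpy as np

def demo_a(t):
    """Hypothetical demonstration A: straight-line reach (x, y) over normalized time."""
    return np.stack([t, 0.2 * t], axis=-1)

def demo_b(t):
    """Hypothetical demonstration B: arched reach over the same time span."""
    return np.stack([t, 0.5 * np.sin(np.pi * t)], axis=-1)

def smoothstep(x):
    """C1-continuous ramp from 0 to 1 on [0, 1]."""
    x = np.clip(x, 0.0, 1.0)
    return x * x * (3.0 - 2.0 * x)

def task_b_weight(t, switches, width):
    """Weight of task B over time; starts at 0 (pure task A).

    `switches` is a list of (t_switch, target_weight): from t_switch the
    weight moves smoothly to target_weight over a window of length `width`.
    """
    w = np.zeros_like(t)
    for t_s, target in switches:
        s = smoothstep((t - t_s) / width)
        w = (1.0 - s) * w + s * target
    return w

def blended_trajectory(t, switches, width=0.1):
    """Convex combination of the two demonstrations with a time-varying weight."""
    w = task_b_weight(t, switches, width)[:, None]
    return (1.0 - w) * demo_a(t) + w * demo_b(t)

# Switch A -> B at t = 0.3, then back B -> A at t = 0.6.
t = np.linspace(0.0, 1.0, 201)
switches = [(0.3, 1.0), (0.6, 0.0)]
traj = blended_trajectory(t, switches)
```

Because the weight changes over a finite window, consecutive samples of `traj` stay close together. A hard switch from A to B at t = 0.3 would instead make the y coordinate jump by about 0.34.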

Testing

The model is tested on a UR10 robot. Different actions are taught by demonstration, and the network is trained on these skills. Below is an example of two of the taught actions.

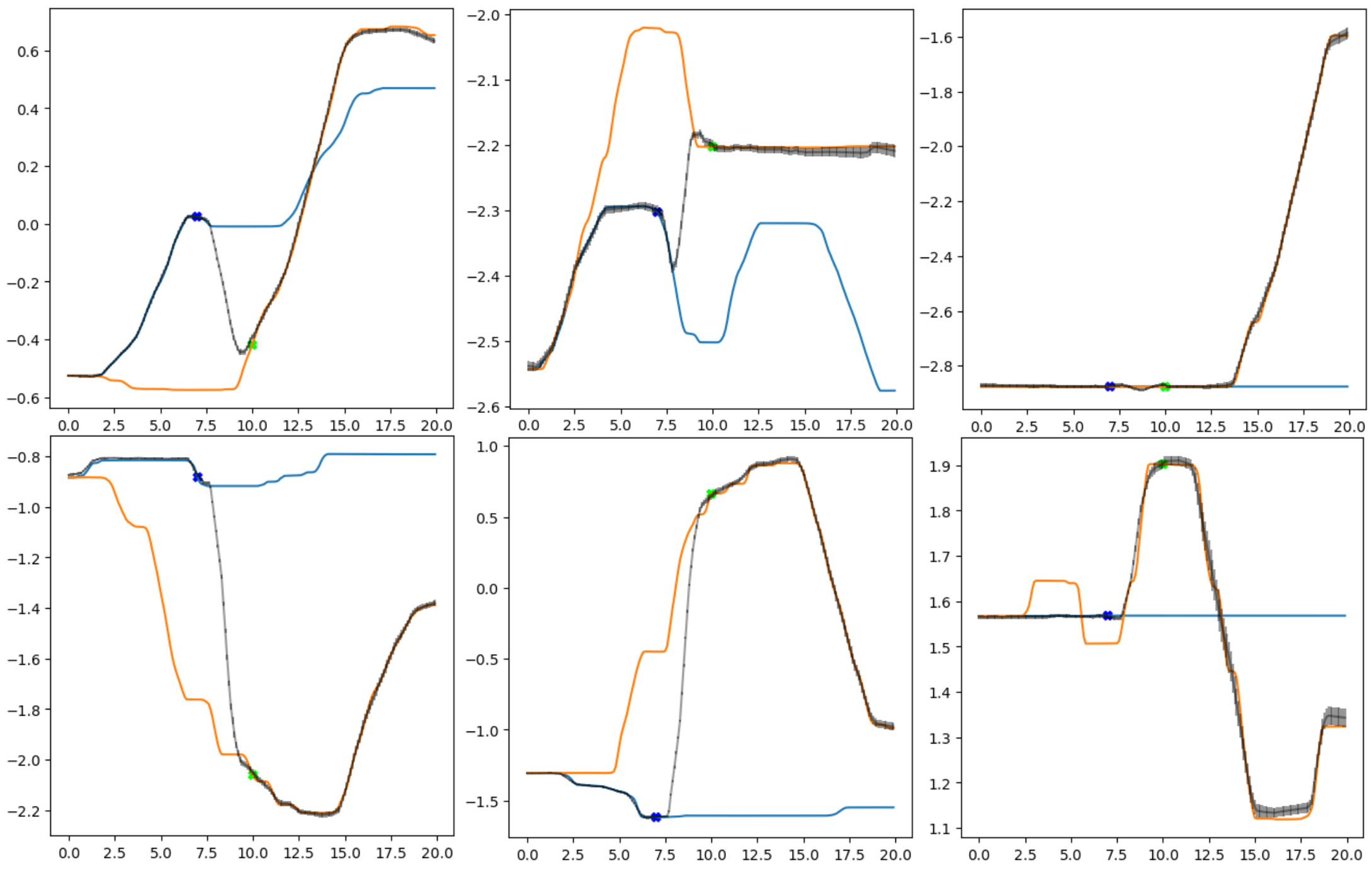

The network is then conditioned on different tasks within the same run. The results of the method are shown below.

The results are reproduced on the robot, where the two actions are correctly blended to adapt to the mixed environment.