Synthesis of Robotic Actions

A novel approach to action synthesis is proposed that leverages movement primitives learned by neural network models. The work introduces a method for adapting demonstrated actions to the environment and then combining them in the correct order to achieve different goals.

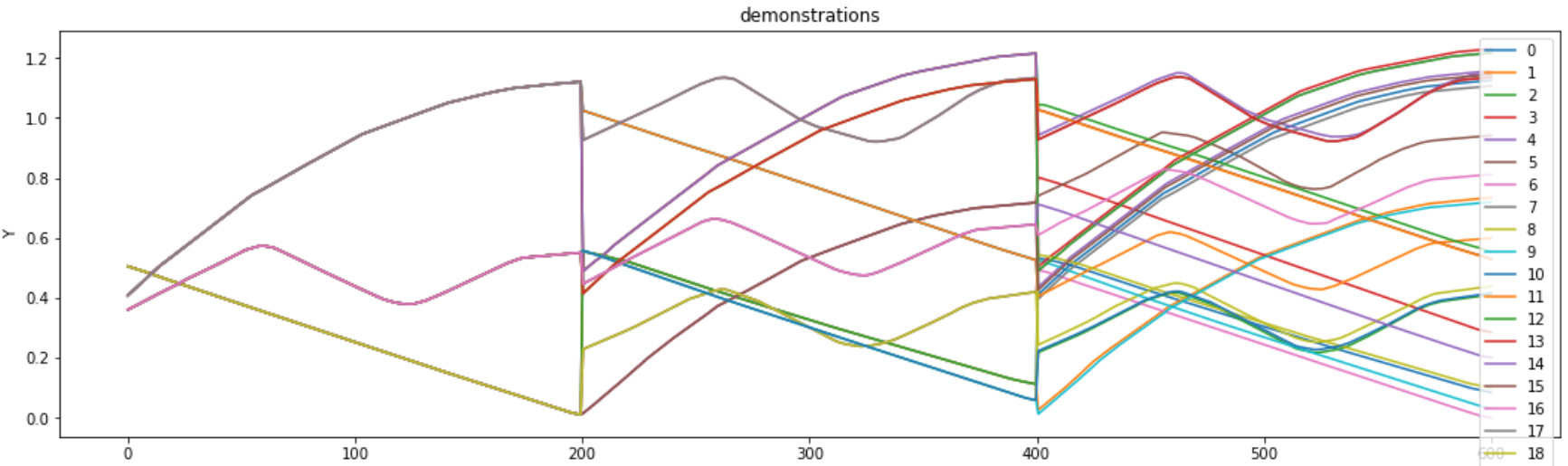

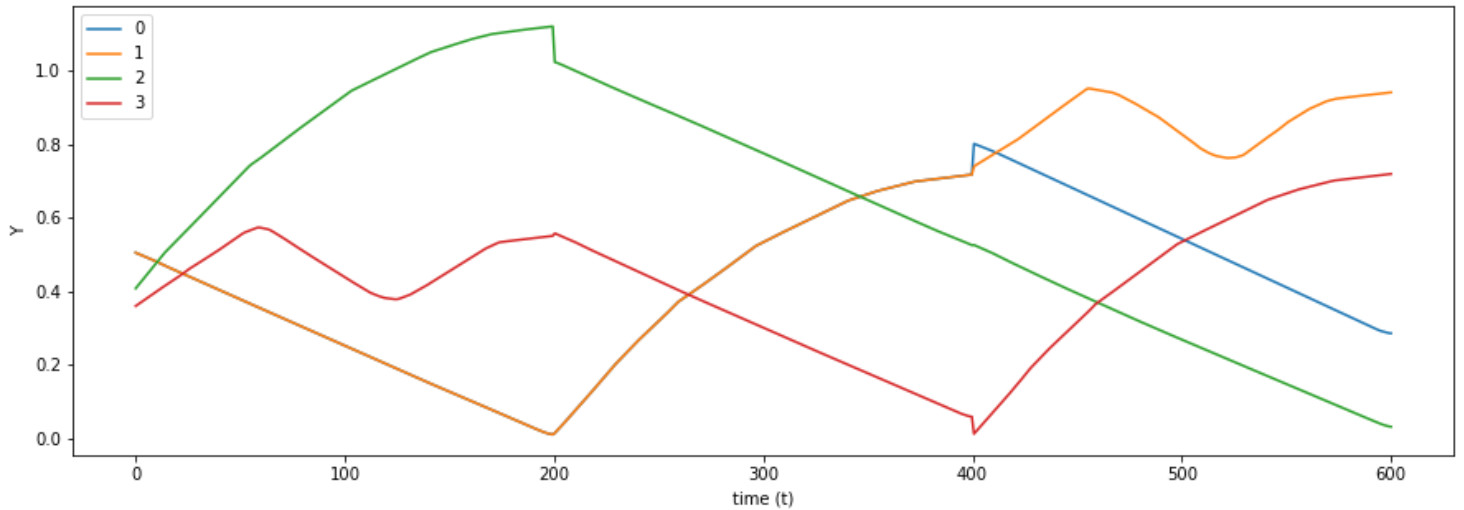

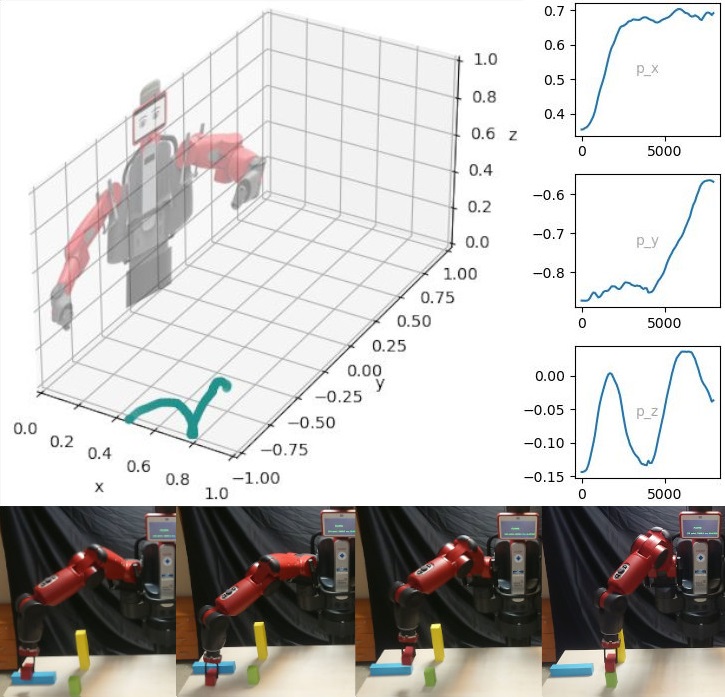

In this research, action synthesis is achieved through the concatenation of basic primitives, spatial interpolation, and the network's ability to encode multidimensional data, which is used to embed a representation of the environment.
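The source does not detail how primitives are joined or interpolated, so the following is only a rough geometric illustration. It assumes trajectories are lists of 2-D points sampled at matching time steps. A plain linear blend between two demonstrations stands in for spatial interpolation. Primitives are joined by translating each one so it starts where the previous one ends. The names `interpolate` and `concatenate` are hypothetical. In the described method, this adaptation comes from the trained network rather than from fixed geometry.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Trajectory = List[Point]


def interpolate(demo_a: Trajectory, demo_b: Trajectory, alpha: float) -> Trajectory:
    """Blend two time-aligned demonstrations point by point.

    alpha = 0 returns demo_a and alpha = 1 returns demo_b. Here alpha stands in
    for an environment parameter (e.g. an object position between the two
    demonstrated ones); the linear blend is an assumption, not the learned model.
    """
    if len(demo_a) != len(demo_b):
        raise ValueError("demonstrations must have the same number of samples")
    return [
        ((1 - alpha) * xa + alpha * xb, (1 - alpha) * ya + alpha * yb)
        for (xa, ya), (xb, yb) in zip(demo_a, demo_b)
    ]


def concatenate(first: Trajectory, second: Trajectory) -> Trajectory:
    """Append `second` after `first`, shifted so it starts where `first` ends."""
    dx = first[-1][0] - second[0][0]
    dy = first[-1][1] - second[0][1]
    shifted = [(x + dx, y + dy) for x, y in second]
    # Drop the first shifted point: it duplicates the junction point.
    return first + shifted[1:]


if __name__ == "__main__":
    # Hypothetical demonstrations of a reaching motion at two object heights.
    reach_low = [(0.0, 0.0), (0.5, 0.2), (1.0, 0.0)]
    reach_high = [(0.0, 0.0), (0.5, 0.6), (1.0, 0.4)]
    reach = interpolate(reach_low, reach_high, 0.5)
    push = [(0.0, 0.0), (0.3, 0.0), (0.6, 0.0)]
    print(concatenate(reach, push))
```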

The result is a long-term sequence of actions, adapted to the environment, that accomplishes each of the requested tasks.

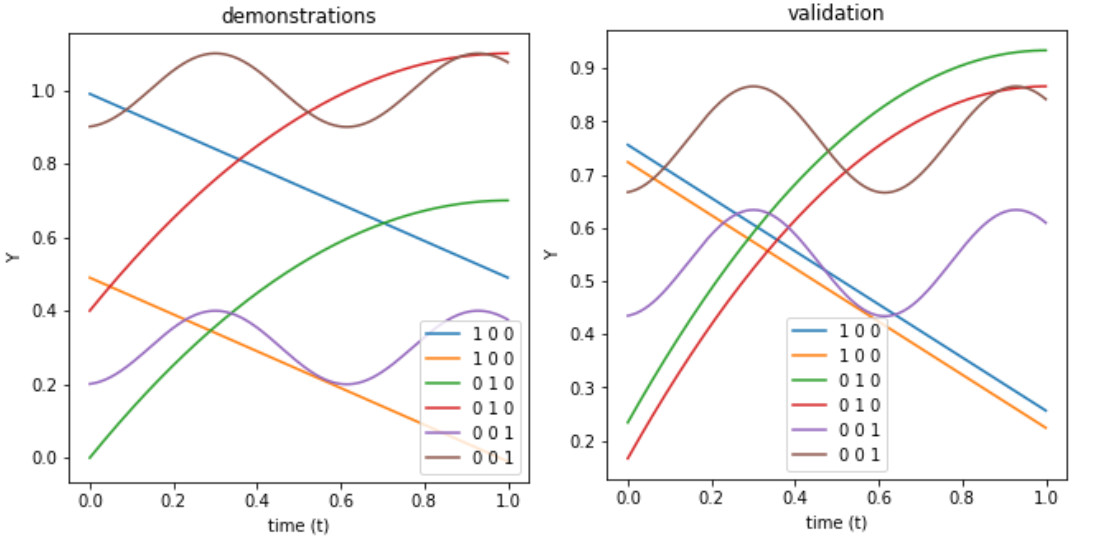

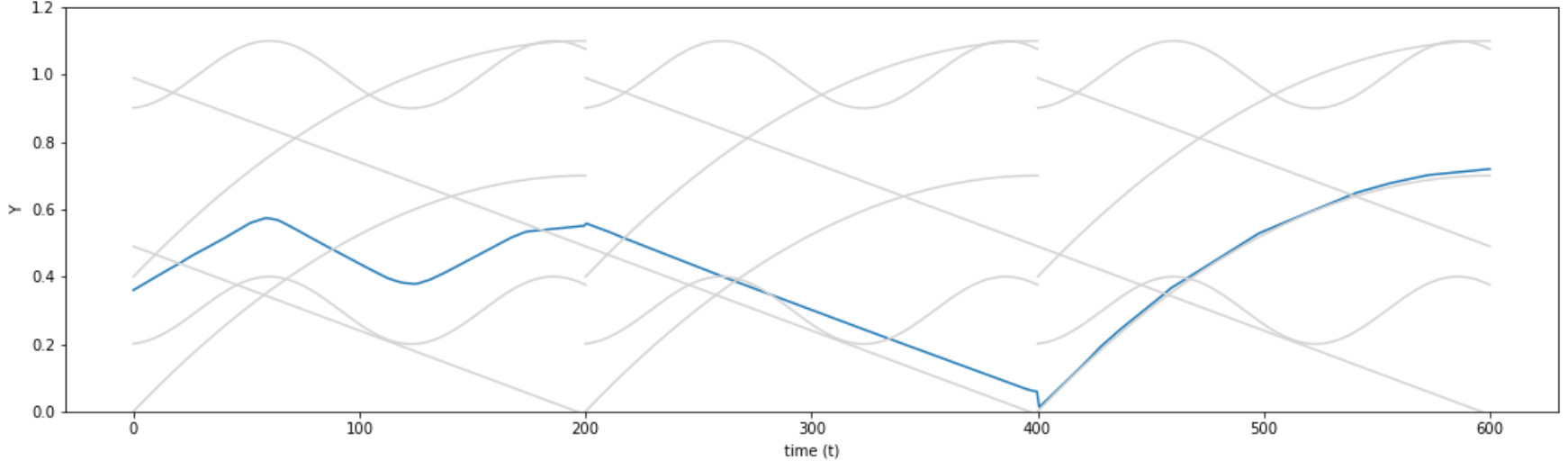

In simple terms, the model is trained on several demonstrated actions. A generated action can then be conditioned on a desired state at a given point in time (e.g., where it should start, end, or pass through).
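The conditioning described above can be illustrated with the general structure of Conditional Neural Processes, on which CNMPs build. Each observed (time, value) pair is encoded, and the encodings are averaged into one representation. A decoder then maps that representation plus a query time to a predicted mean and standard deviation. Everything below is an illustrative assumption. The `TinyCNMP` class, the layer sizes, and the random weights are hypothetical. The network is untrained, so its outputs are meaningless numbers. In practice the model would be trained on the demonstrations with a deep learning framework.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]


def init_layer(rng: random.Random, n_in: int, n_out: int) -> Layer:
    """Random weights in [-1, 1] and zero biases (no training happens here)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(layer: Layer, x: Sequence[float]) -> List[float]:
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def softplus(a: float) -> float:
    # Numerically stable log(1 + exp(a)); keeps the predicted std positive.
    return max(a, 0.0) + math.log1p(math.exp(-abs(a)))


class TinyCNMP:
    """Hypothetical, untrained CNMP-shaped model: encoder -> mean -> decoder."""

    def __init__(self, y_dim: int = 1, hidden: int = 16, r_dim: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.y_dim = y_dim
        self.enc = [init_layer(rng, 1 + y_dim, hidden), init_layer(rng, hidden, r_dim)]
        self.dec = [init_layer(rng, r_dim + 1, hidden), init_layer(rng, hidden, 2 * y_dim)]

    def encode(self, t: float, y: Sequence[float]) -> List[float]:
        # Each (time, value) observation becomes a fixed-size representation.
        return linear(self.enc[1], relu(linear(self.enc[0], [t, *y])))

    def predict(
        self, observations: Sequence[Tuple[float, Sequence[float]]], t_query: float
    ) -> Tuple[List[float], List[float]]:
        """Predict mean and std of the motion at t_query given conditioning points."""
        if not observations:
            raise ValueError("need at least one conditioning point")
        reps = [self.encode(t, y) for t, y in observations]
        # Averaging yields a fixed-size representation regardless of how many
        # points are given and of their order.
        r = [sum(col) / len(reps) for col in zip(*reps)]
        out = linear(self.dec[1], relu(linear(self.dec[0], r + [t_query])))
        mean = out[: self.y_dim]
        std = [softplus(s) + 1e-3 for s in out[self.y_dim :]]
        return mean, std


if __name__ == "__main__":
    model = TinyCNMP()
    # Condition on a start point (t=0) and a via-point (t=0.5); query the end (t=1).
    mean, std = model.predict([(0.0, [0.1]), (0.5, [0.8])], 1.0)
    print(mean, std)
```

Any number of conditioning points, at any times, can be passed to `predict`. This is what allows a start, end, or via-point to be imposed on a generated action.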

Given a starting state, all possible actions are computed from it: the network adapts each previously taught action to the current state. All possible actions are then computed again from the final state of each of these actions, so the possibilities grow into a tree.

The tree of possibilities is pruned wherever an action fails to meet a given criterion.

The correct sequence that reaches the desired goal is identified and executed.
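The expand, prune, and select steps above can be sketched as a breadth-first search over the action tree. This is a minimal illustration under heavy assumptions. States are discrete grid cells. Each primitive is a hand-written function returning its end state, standing in for the network adapting a taught action to the current state. The pruning criterion `is_valid` and the goal test `is_goal` are hypothetical placeholders, since the source does not state which criteria are used. Breadth-first order is also an assumption: it returns a shortest sequence, but the original work may select sequences differently.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

State = Tuple[int, int]
Primitive = Callable[[State], State]


def search(
    start: State,
    primitives: Dict[str, Primitive],
    is_valid: Callable[[State], bool],
    is_goal: Callable[[State], bool],
    max_depth: int,
) -> Optional[List[str]]:
    """Expand the action tree level by level and return the first goal-reaching plan.

    From each state every primitive is applied; branches whose end state fails
    `is_valid` are pruned; returns None if no plan of length <= max_depth works.
    """
    frontier: Deque[Tuple[State, List[str]]] = deque([(start, [])])
    while frontier:
        state, plan = frontier.popleft()
        if is_goal(state):
            return plan
        if len(plan) == max_depth:
            continue  # Do not expand beyond the depth limit.
        for name, primitive in primitives.items():
            end_state = primitive(state)
            if is_valid(end_state):  # Prune branches that fail the criterion.
                frontier.append((end_state, plan + [name]))
    return None


if __name__ == "__main__":
    # Hypothetical primitives on a grid.
    primitives = {
        "move_right": lambda s: (s[0] + 1, s[1]),
        "move_up": lambda s: (s[0], s[1] + 1),
    }

    def is_valid(s: State) -> bool:
        # Hypothetical criterion: stay in a 4x4 workspace and avoid an obstacle at (1, 0).
        return 0 <= s[0] <= 3 and 0 <= s[1] <= 3 and s != (1, 0)

    print(search((0, 0), primitives, is_valid, lambda s: s == (2, 1), max_depth=4))
```

Because repeated states are not merged, the tree grows exponentially with depth. This is why pruning invalid branches early matters.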

Testing

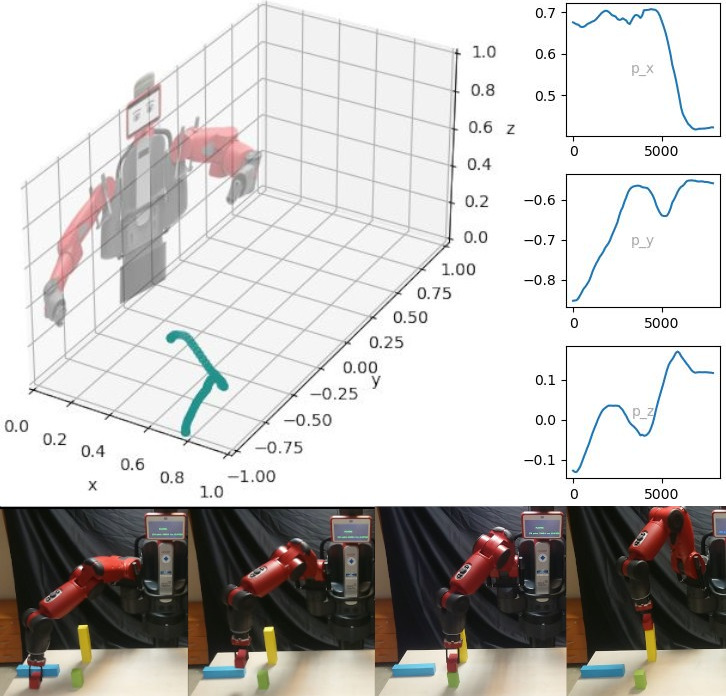

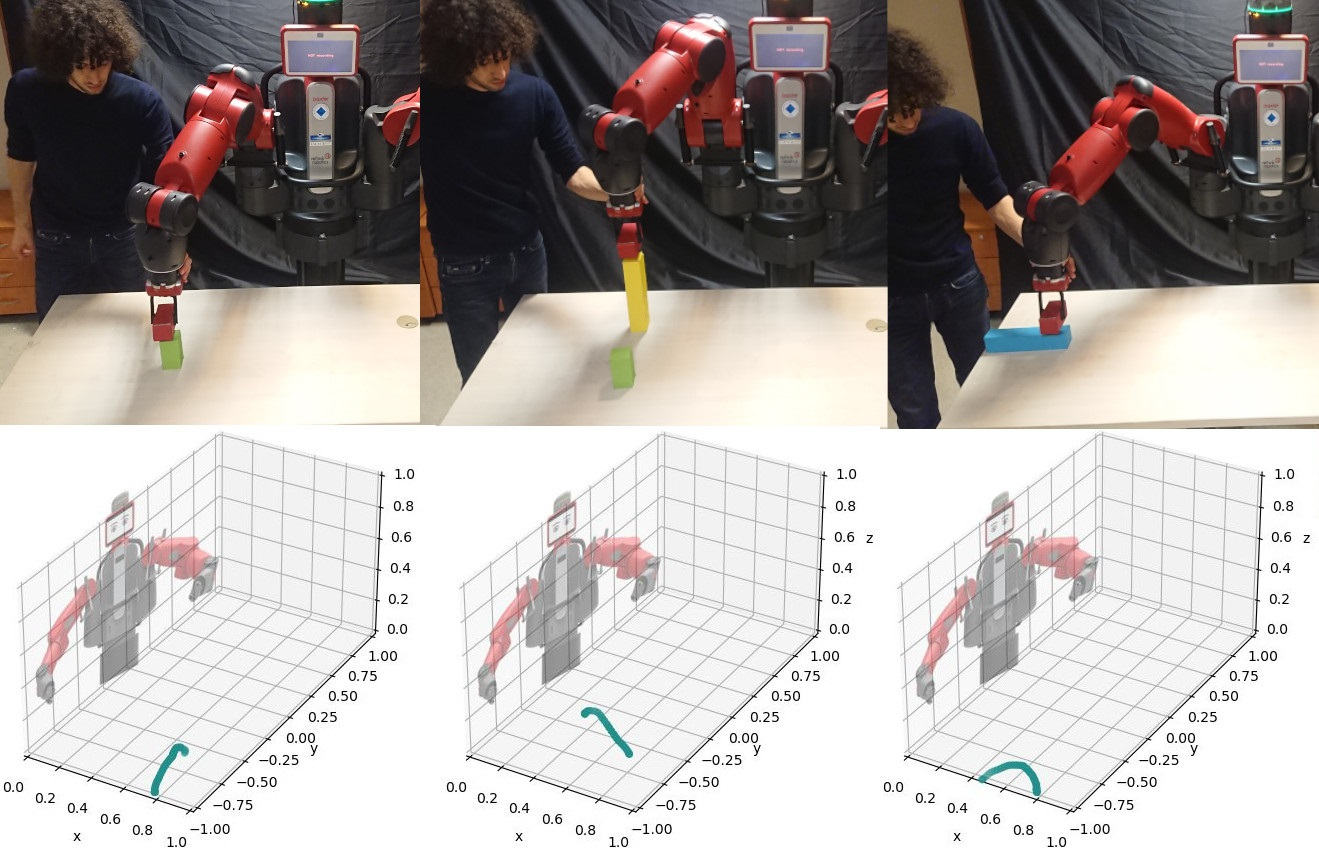

The model is tested on the Baxter robot. Several actions are taught by demonstration, and the Conditional Neural Movement Primitives (CNMP) network is trained on those skills.

The method then adapts these skills spatially to the environment and finds the sequence that achieves the goal, even though that sequence was never explicitly demonstrated by the expert.

This gives the method the flexibility to achieve many different goals (a number that grows exponentially with the length of the action sequence) using only the base set of learned actions.
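To make the exponential growth concrete, assume that $k$ learned primitives can each be applied from any state, ignoring pruning. This is a simplification for illustration. The number of distinct action sequences of length at most $D$ is then

$$
N(D) = \sum_{d=1}^{D} k^{d} = \frac{k\,(k^{D} - 1)}{k - 1}, \qquad k > 1 .
$$

For example, with $k = 3$ primitives and $D = 4$ steps, $N(4) = 3 + 9 + 27 + 81 = 120$ candidate sequences, all built from just three taught actions.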